Introduction

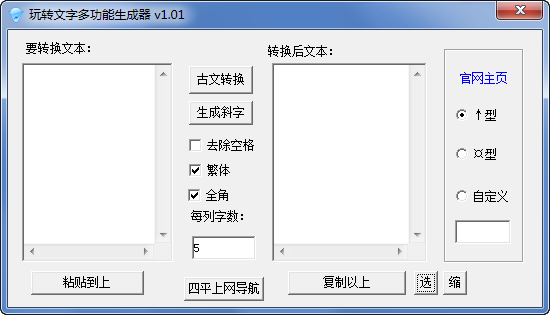

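My former colleague Medcl has also published an analysis plugin on GitHub that converts between Simplified and Traditional Chinese. It is useful in many real applications, for example when our documents are written in Traditional Chinese but we want to search them using Simplified Chinese.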

Installation

We can install the plugin as follows:

    ./bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-stconvert/releases/download/v8.2.3/elasticsearch-analysis-stconvert-8.2.3.zip

Choose the release that matches your own Elasticsearch version.
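Since the download URL embeds the version twice, it is easy to mistype. The following is a minimal sketch that builds the install command from a version string. The URL pattern is copied from the command above; the helper names `stconvert_plugin_url` and `install_command` are made up for this illustration, and the code does not check that a release actually exists for the version you pass in.

```python
# Sketch only: builds the install command for a given plugin version.
# The URL pattern comes from the install command shown in this article.
# It does NOT verify that a release exists for that version.
RELEASE_URL = (
    "https://github.com/medcl/elasticsearch-analysis-stconvert/releases/"
    "download/v{version}/elasticsearch-analysis-stconvert-{version}.zip"
)


def stconvert_plugin_url(es_version: str) -> str:
    """Return the release URL for a version string such as '8.2.3'."""
    parts = es_version.split(".")
    if len(parts) != 3 or not all(p.isdigit() for p in parts):
        raise ValueError(f"expected a version like '8.2.3', got {es_version!r}")
    return RELEASE_URL.format(version=es_version)


def install_command(es_version: str) -> str:
    """Return the shell command that installs the plugin for that version."""
    return f"./bin/elasticsearch-plugin install {stconvert_plugin_url(es_version)}"


print(install_command("8.2.3"))
```

You can find your own version in the `version.number` field returned by `GET /` and pass it to `install_command`.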

After installing the plugin, remember to restart the Elasticsearch cluster. We can then check that the plugin is installed with the following command:

    $ ./bin/elasticsearch-plugin list
    analysis-stconvert

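The plugin provides the following components: an analyzer, a tokenizer, a token filter, and a char filter, each named stconvert.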

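It also supports the following settings:

convert_type: defaults to s2t. Use s2t to convert Simplified to Traditional Chinese, or t2s to convert Traditional to Simplified Chinese.

keep_both: defaults to false. It controls whether the original text is kept along with the converted text.

delimiter: defaults to ,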
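To make the settings concrete, here is a small sketch that builds the settings object for one stconvert component and rejects an unknown `convert_type`. The function name `stconvert_settings` is hypothetical, and the `s2t` default for `convert_type` is taken from the plugin's README as I remember it, so treat it as an assumption and check it against the version you install.

```python
import json

# Assumption: these are the two conversion directions the plugin accepts.
VALID_CONVERT_TYPES = {"s2t", "t2s"}  # Simplified->Traditional, Traditional->Simplified


def stconvert_settings(convert_type="s2t", keep_both=False, delimiter=","):
    """Build the settings dict for a tokenizer or token filter of type 'stconvert'.

    The default values mirror the defaults described in the text above.
    """
    if convert_type not in VALID_CONVERT_TYPES:
        raise ValueError(f"convert_type must be one of {sorted(VALID_CONVERT_TYPES)}")
    return {
        "type": "stconvert",
        "convert_type": convert_type,
        "keep_both": keep_both,
        "delimiter": delimiter,
    }


# The same values used by the tokenizer and filter in the example that follows.
print(json.dumps(stconvert_settings(convert_type="t2s", delimiter="#"), indent=2))
```

The resulting dict can be placed under `settings.analysis.tokenizer.<name>` or `settings.analysis.filter.<name>` when creating an index.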

Let's use the following example to demonstrate:

    PUT /stconvert/
    {
      "settings": {
        "analysis": {
          "analyzer": {
            "tsconvert": {
              "tokenizer": "tsconvert"
            }
          },
          "tokenizer": {
            "tsconvert": {
              "type": "stconvert",
              "delimiter": "#",
              "keep_both": false,
              "convert_type": "t2s"
            }
          },
          "filter": {
            "tsconvert": {
              "type": "stconvert",
              "delimiter": "#",
              "keep_both": false,
              "convert_type": "t2s"
            }
          },
          "char_filter": {
            "tsconvert": {
              "type": "stconvert",
              "convert_type": "t2s"
            }
          }
        }
      }
    }

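Above, we create an index named stconvert. It defines an analyzer named tsconvert, together with a tokenizer, a token filter, and a char filter of the same name, all converting Traditional to Simplified Chinese (t2s). If you want to learn more about customizing analyzers, please read my earlier article "Elasticsearch: analyzer".

Let's run the following analysis test: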

    GET stconvert/_analyze
    {
      "tokenizer": "keyword",
      "filter": ["lowercase"],
      "char_filter": ["tsconvert"],
      "text": "国际國際"
    }

The command above returns:

    {
      "tokens": [
        {
          "token": "国际国际",
          "start_offset": 0,
          "end_offset": 4,
          "type": "word",
          "position": 0
        }
      ]
    }
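Outside of Kibana, the same `_analyze` request can be sent from a script. The sketch below uses only the Python standard library and assumes an unsecured node at `http://localhost:9200`; that address and the helper names `build_analyze_request` and `run` are assumptions for illustration. Elasticsearch 8 enables TLS and authentication by default, so a real cluster will most likely need HTTPS and credentials on top of this.

```python
import json
import urllib.request


def build_analyze_request(base_url, index, body):
    """Build (but do not send) a request for the index's _analyze endpoint."""
    data = json.dumps(body, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index}/_analyze",
        data=data,
        headers={"Content-Type": "application/json"},
        method="GET",
    )


def run(request):
    """Send the request and decode the JSON response (needs a reachable node)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


body = {
    "tokenizer": "keyword",
    "filter": ["lowercase"],
    "char_filter": ["tsconvert"],
    "text": "国际國際",
}
# Assumed address of a local, unsecured test node.
request = build_analyze_request("http://localhost:9200", "stconvert", body)
print(request.get_method(), request.full_url)

# With a running cluster that has the stconvert index from this article:
# tokens = [t["token"] for t in run(request)["tokens"]]
```

Building the request separately from sending it keeps the example inspectable without a cluster.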

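We can also use the char filter in a custom normalizer, so that a keyword field matches regardless of whether the text is Traditional or Simplified Chinese: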

    PUT index
    {
      "settings": {
        "analysis": {
          "char_filter": {
            "tsconvert": {
              "type": "stconvert",
              "convert_type": "t2s"
            }
          },
          "normalizer": {
            "my_normalizer": {
              "type": "custom",
              "char_filter": ["tsconvert"],
              "filter": ["lowercase"]
            }
          }
        }
      },
      "mappings": {
        "properties": {
          "foo": {
            "type": "keyword",
            "normalizer": "my_normalizer"
          }
        }
      }
    }

We index a couple of documents with the following commands:

    PUT index/_doc/1
    {
      "foo": "國際"
    }

    PUT index/_doc/2
    {
      "foo": "国际"
    }

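Above, we set my_normalizer as the normalizer of the foo field, so the Traditional Chinese "國際" is converted to "国际" by the char_filter before it is indexed. When we search with the following command: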

    GET index/_search
    {
      "query": {
        "term": {
          "foo": "国际"
        }
      }
    }

It returns:

    {
      "took": 1,
      "timed_out": false,
      "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
      "hits": {
        "total": { "value": 2, "relation": "eq" },
        "max_score": 0.18232156,
        "hits": [
          {
            "_index": "index",
            "_id": "1",
            "_score": 0.18232156,
            "_source": { "foo": "國際" }
          },
          {
            "_index": "index",
            "_id": "2",
            "_score": 0.18232156,
            "_source": { "foo": "国际" }
          }
        ]
      }
    }

If we run the term query with the Traditional Chinese form instead:

    GET index/_search
    {
      "query": {
        "term": {
          "foo": "國際"
        }
      }
    }

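It returns the same two documents, because the normalizer of a keyword field is also applied to the term in the query: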

    {
      "took": 0,
      "timed_out": false,
      "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
      "hits": {
        "total": { "value": 2, "relation": "eq" },
        "max_score": 0.18232156,
        "hits": [
          {
            "_index": "index",
            "_id": "1",
            "_score": 0.18232156,
            "_source": { "foo": "國際" }
          },
          {
            "_index": "index",
            "_id": "2",
            "_score": 0.18232156,
            "_source": { "foo": "国际" }
          }
        ]
      }
    }
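Both queries return both documents because the same normalization is applied to the indexed value and to the query term before they are compared. The toy model below illustrates that idea in plain Python. It is not how Elasticsearch or the plugin is implemented: `T2S_SAMPLE` is a hypothetical two-character table standing in for the plugin's real conversion dictionary, and the `index` dict stands in for the inverted index.

```python
# Hypothetical stand-in for the plugin's Traditional->Simplified dictionary.
T2S_SAMPLE = {"國": "国", "際": "际"}


def normalize(value):
    """Mimic my_normalizer: t2s char filter first, then lowercase."""
    converted = "".join(T2S_SAMPLE.get(ch, ch) for ch in value)
    return converted.lower()


# The two documents written in the article, keyed by _id.
docs = {"1": "國際", "2": "国际"}

# Toy inverted index: normalized keyword -> list of document ids.
index = {}
for doc_id, value in docs.items():
    index.setdefault(normalize(value), []).append(doc_id)


def term_query(term):
    """Normalize the query term the same way, then do an exact lookup."""
    return index.get(normalize(term), [])


print(term_query("国际"))  # ['1', '2']
print(term_query("國際"))  # ['1', '2']
```

Note that only the indexed term is normalized. The `_source` of document 1 still contains the original "國際", which matches the search responses shown above.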

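We can even combine it with the IK analyzer that I introduced earlier to tokenize Traditional Chinese text. Because an index named index already exists from the previous example, delete it first (DELETE index) or use a different name: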

    PUT index
    {
      "settings": {
        "analysis": {
          "char_filter": {
            "tsconvert": {
              "type": "stconvert",
              "convert_type": "t2s"
            }
          },
          "analyzer": {
            "my_analyzer": {
              "type": "custom",
              "char_filter": ["tsconvert"],
              "tokenizer": "ik_smart",
              "filter": ["lowercase"]
            }
          }
        }
      },
      "mappings": {
        "properties": {
          "foo": {
            "type": "text",
            "analyzer": "my_analyzer"
          }
        }
      }
    }

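Above, the text is first converted from Traditional to Simplified Chinese, then tokenized by the ik_smart tokenizer, and finally lowercased. We test it with the following command: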

    GET index/_analyze
    {
      "analyzer": "my_analyzer",
      "text": "我愛北京天安門"
    }

The command above returns:

    {
      "tokens": [
        { "token": "我", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0 },
        { "token": "爱", "start_offset": 1, "end_offset": 2, "type": "CN_CHAR", "position": 1 },
        { "token": "北京", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 2 },
        { "token": "天安门", "start_offset": 4, "end_offset": 7, "type": "CN_WORD", "position": 3 }
      ]
    }
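The order of the stages matters: the char filter runs before the tokenizer, so the tokenizer sees Simplified text. The sketch below imitates that order with toy stand-ins. `T2S_SAMPLE` and `DICTIONARY` are tiny hypothetical tables, and the greedy longest-match tokenizer is a simplification chosen for illustration; it is not the algorithm that ik_smart actually uses.

```python
# Hypothetical stand-ins: a tiny t2s table and a tiny Simplified-only word list.
T2S_SAMPLE = {"愛": "爱", "門": "门"}
DICTIONARY = {"北京", "天安门"}


def char_filter(text):
    """Stage 1: convert Traditional characters to Simplified."""
    return "".join(T2S_SAMPLE.get(ch, ch) for ch in text)


def tokenize(text):
    """Stage 2: greedy longest-match against DICTIONARY, else single characters."""
    max_len = max(len(word) for word in DICTIONARY)
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            if size == 1 or candidate in DICTIONARY:
                tokens.append(candidate)
                i += size
                break
    return tokens


def analyze(text):
    """Run char filter, then tokenizer, then the lowercase token filter."""
    return [token.lower() for token in tokenize(char_filter(text))]


print(analyze("我愛北京天安門"))   # ['我', '爱', '北京', '天安门']
print(tokenize("我愛北京天安門"))  # ['我', '愛', '北京', '天', '安', '門']
```

The second call skips the char filter: with a Simplified-only word list, "天安門" is no longer recognized as a word and falls apart into single characters, which is why the conversion is placed in front of the tokenizer.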

We can run another test:

    GET index/_analyze
    {
      "analyzer": "my_analyzer",
      "text": "請輸入要轉換簡繁體的中文漢字"
    }

The result is:

    {
      "tokens": [
        { "token": "请", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0 },
        { "token": "输入", "start_offset": 1, "end_offset": 3, "type": "CN_WORD", "position": 1 },
        { "token": "要", "start_offset": 3, "end_offset": 4, "type": "CN_CHAR", "position": 2 },
        { "token": "转换", "start_offset": 4, "end_offset": 6, "type": "CN_WORD", "position": 3 },
        { "token": "简繁体", "start_offset": 6, "end_offset": 9, "type": "CN_WORD", "position": 4 },
        { "token": "的", "start_offset": 9, "end_offset": 10, "type": "CN_CHAR", "position": 5 },
        { "token": "中文", "start_offset": 10, "end_offset": 12, "type": "CN_WORD", "position": 6 },
        { "token": "汉字", "start_offset": 12, "end_offset": 14, "type": "CN_WORD", "position": 7 }
      ]
    }
